The race for next-gen microchips is on: The AI revolution can’t happen without them

12/06/2017 / By Russel Davis

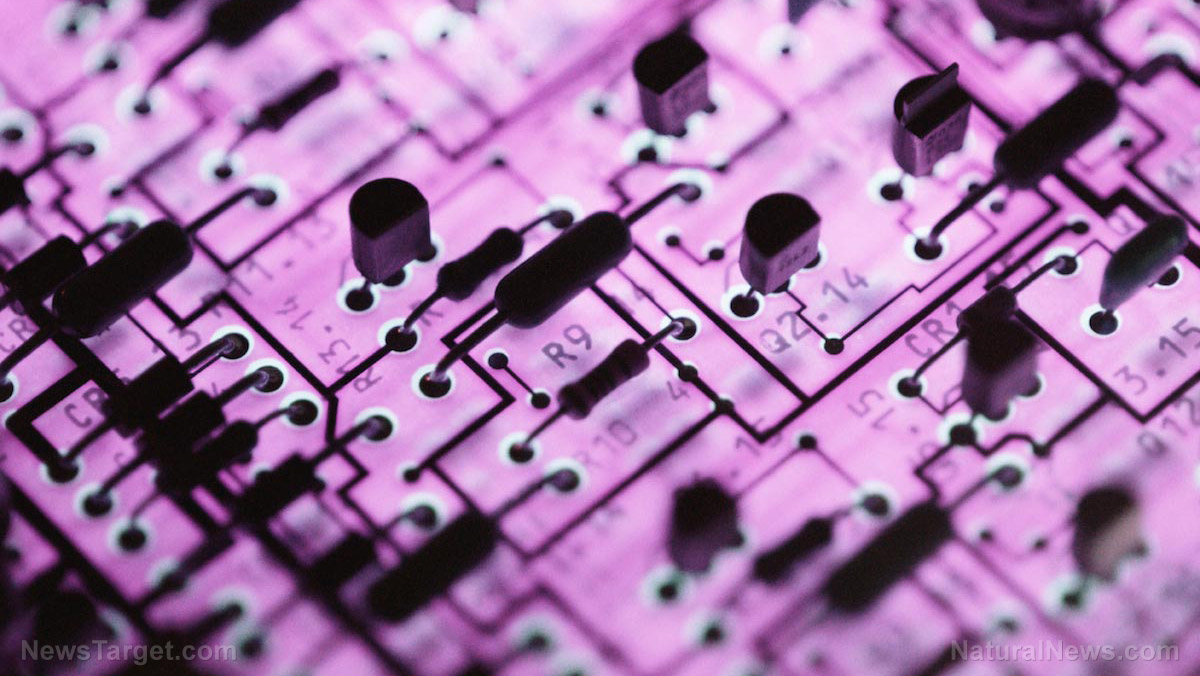

The inevitable artificial intelligence (AI) revolution currently faces big hurdles, as today's microchip capacity has apparently reached its limits. Experts say that while transistors and microchips have allowed people to calculate even the largest numbers, the way the brain processes visual cues and other data is far more complex than the calculations carried out by conventional arithmetic.

According to technology experts, today's standard microchips will not be able to accommodate the intricate algorithms needed to develop autonomous cars, autonomous planes, and other data-intensive programs. These experts have also cautioned that the coming AI revolution may render Moore's Law obsolete by 2020. Formulated by Gordon Moore, the law states that the number of transistors per square inch on integrated circuits doubles about every 18 months.

Arati Prabhakar, former director of the Defense Advanced Research Projects Agency (DARPA), stresses that integrated circuits might be difficult to replace. However, she has noted that it is possible to achieve gains similar to those predicted by Moore's Law by developing chips designed to carry out specific tasks.

"There's a $300 billion-a-year global semiconductor industry that cares deeply about the answer of what comes next. [The integrated circuit is] a computational unit that you could use to do the broadest possible class of problems. If you're willing to work on specialized classes of problems, you can actually get a lot more out of specialized architectures. Special architectures will give us many more steps forward," Prabhakar told Defense One.

DARPA’s next-generation microchips may usher in the AI era

In line with this, DARPA recently unveiled its Electronics Resurgence Initiative (ERI), which features several new projects focused on developing next-generation chips. One of these projects, called Software Defined Hardware, aims to develop a hardware/software system that lets data-intensive algorithms run with the same efficiency as application-specific integrated circuits (ASICs), without the cost, long development time, and narrow range of applications typical of ASICs.

Another project, called Domain-Specific System on a Chip, targets chips that perform several specialized functions. The program aims to let chip architects combine general-purpose and special-purpose processors, hardware accelerator co-processors, and input/output elements into an easily programmed system on a chip that runs applications within specific technology domains. Experts say this approach echoes Moore's 1965 paper, which predicted that matching and tracking similar components within integrated structures would eventually lead to differential amplifiers with greatly improved capacity and performance.

"Moore's Law has guided the electronics industry for more than 50 years. Moore's Law has set the technology community on a quest for continued scaling and those who have mastered the technology to date have enjoyed the greatest commercial benefits and the greatest gains in defense capabilities. These new ERI investments, in combination with the investments in our current programs and JUMP, constitute the next steps in creating a lasting foundation for innovating and delivering electronics capabilities that will contribute crucially to national security in the 2025 to 2030 time frame," says Bill Chappell, director of DARPA's Microsystems Technology Office.


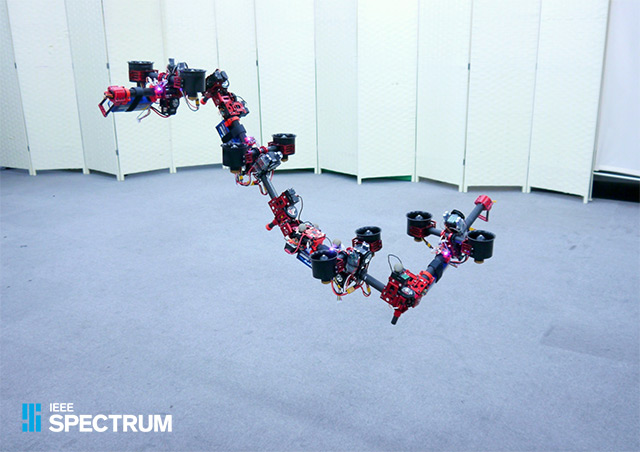
